A Triveritan analysis of Lee Kuan Yew’s statement that in multiethnic, multireligious societies, all politics is identity politics.

This is a strong empirical claim with clear testable predictions. The exact Lee Kuan Yew quote, from his 2005 Der Spiegel interview: “In multiracial societies, you don’t vote in accordance with your economic interests and social interests, you vote in accordance with race and religion.”

TRIVERITAN ANALYSIS

Claim under evaluation: In multiethnic, multireligious societies, voting behavior is determined primarily by racial and religious identity rather than by economic or social interest. (Lee Kuan Yew, Der Spiegel, 2005.)

Claim type: Empirical generalization about political behavior in diverse societies. This is not a forward-looking forecast. It is a claim about an observable regularity, which means the empirical anchoring (E) dimension should be rich and the framework should perform near its design strength.

LOGICAL VALIDITY (L): 78

The claim has the structure of a behavioral law: given condition X (multiethnic, multireligious society), behavior Y (identity voting) predominates over behavior Z (economic/social interest voting). The logic is clean. The implicit causal mechanism is that group identity provides a more reliable heuristic for predicting political outcomes than economic calculation does, because in a diverse society the question “who gets what” is inseparable from “which group controls the distribution.” The voter who votes his economic interest in a homogeneous society is already voting his identity, because everyone shares it. When identities diverge, the voter must choose which heuristic to trust, and identity is the stronger signal because it answers the prior question: who will be making the decisions about my economic interests?

This is logically tight. It does not commit the fallacy of confusing correlation with causation, because the mechanism is specified: identity determines which coalition controls the state, and control of the state determines economic distribution. Voting your identity is voting your economic interest one level up.

Two logical vulnerabilities prevent a higher score. First, the claim as stated is absolute: “you don’t vote in accordance with your economic interests… you vote in accordance with race and religion.” The word “don’t” leaves no room for mixed motivation. A more precise formulation would be “identity dominates economic interest as the primary determinant.” Lee knew this, of course. He was making a practical observation for public consumption, not writing a journal article. But the logical structure of the absolute claim is slightly weaker than the probabilistic version.

Second, the claim does not specify a threshold for what counts as “multiracial” or “multireligious.” Singapore with four major groups? The United States with shifting coalitions? A society with 95% one group and 5% another? The claim’s scope conditions are underspecified.

These are real but modest weaknesses. The core logical architecture is sound.

MATHEMATICAL COHERENCE (M): 82

This is where the claim distinguishes itself from most political commentary. It makes quantitative predictions that can be checked. If Lee is right, we should observe: (1) high correlation between group demographic share and vote share in multiethnic constituencies, (2) that correlation should be stronger than the correlation between economic indicators and vote share, and (3) the effect should be observable across multiple countries, time periods, and electoral systems.
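Prediction (2) can be checked mechanically once per-constituency series are in hand. The following is a minimal sketch, assuming Pearson correlation as the measure (the claim itself does not specify one). The function names `pearson` and `identity_dominates` and the shape of the inputs are hypothetical, chosen only for illustration.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length numeric sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length sequences with at least 2 points")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant series")
    return cov / sqrt(var_x * var_y)


def identity_dominates(group_share, econ_indicator, vote_share):
    """Prediction (2): is |r(group share, vote)| larger than |r(economy, vote)|?

    Each argument is a per-constituency series (hypothetical input shape).
    Returns (verdict, r_identity, r_economic).
    """
    r_identity = pearson(group_share, vote_share)
    r_economic = pearson(econ_indicator, vote_share)
    return abs(r_identity) > abs(r_economic), r_identity, r_economic
```

Prediction (3) then amounts to repeating the same comparison across countries, time periods, and electoral systems. Comparing two correlation magnitudes is a crude test; it is shown here only to make the structure of the prediction concrete.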

The data is remarkably cooperative.

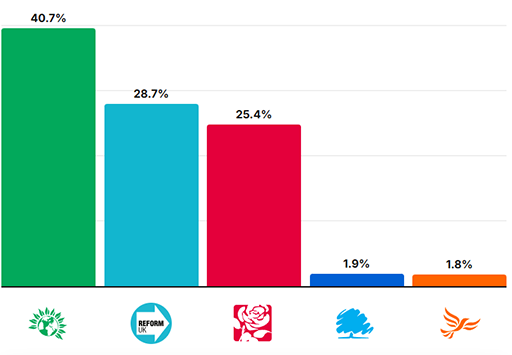

Gorton and Denton, 2026. Muslim population: 28% of constituency. Green Party vote share: 40.7%. The Green Party campaigned explicitly on Gaza, against Islamophobia, in Urdu and Bengali. The constituency is geographically segregated: Pakistani Muslim voters concentrated in Longsight and adjacent wards (formerly Manchester Gorton); Denton is overwhelmingly white British. Pre-election polls had the Greens at 27-32%. The actual result overperformed every poll. The near-perfect alignment of Muslim population share with the floor of Green support (the additional 12-13 points coming from tactical anti-Reform voting by non-Muslim progressives) is exactly what the Lee model predicts: the identity bloc votes as a bloc, then attracts additional support from ideological allies. The identity vote is the foundation; everything else is decoration.

The observation, made in the UnHerd analysis, that the Green Party’s cultural liberalism is “fundamentally at odds with Islamic social conservatism” makes the mathematical case stronger, not weaker. If voters were voting economic or social interests, socially conservative Muslims would not be voting for a party that supports drug liberalization and gender ideology. They are voting identity. The policy alignment is on one axis only: the axis that maps onto group identity (Gaza, Islamophobia, community recognition). Every other policy dimension is irrelevant to the voting calculus. This is precisely what Lee predicted.

United States, 2024. Black voters: 83% Harris, 15% Trump (Pew validated data). This has been stable for decades: Black voters have supported the Democratic candidate at 80% or more in every presidential election since 1964. Economic conditions, candidate quality, and specific policy platforms vary enormously across these elections. The constant is racial identity. Even in 2024, when young Black and Hispanic men were deeply pessimistic about the economy and retrospectively approved of Trump’s economic management, 83% of Black voters still voted Harris. Economic interest pointed one direction; identity pointed the other. Identity won.

Hispanic voters are the partial exception that proves the rule. Their identity as a voting bloc has been less cohesive (linguistic and national-origin diversity within the category), and their voting has been correspondingly less monolithic. When identity cohesion weakens, economic voting increases. This is exactly the mathematical relationship Lee’s claim predicts: identity voting strength correlates with group homogeneity.

The quantitative literature confirms this. The ScienceDirect study on ethnic voting across multiple countries found that groups with greater internal homogeneity show higher levels of ethnic voting. The Yale/ISPS study found that racial identity explains 60% of the variation in district-level voting patterns in the US, while geography explains only 30%. The Cambridge study of racially polarized voting found that Black voters consistently choose Democratic candidates across all districts regardless of local context, while white and Hispanic voters show more geographic variation, precisely tracking the group-homogeneity prediction.

Kenya. Voting patterns described in the literature as “ethnic arithmetic,” with coalitions forming along tribal lines. In-country Kenyans show strong co-ethnic voting; diaspora Kenyans significantly less so. This is a clean natural experiment: same ethnic identity, different social context. The diaspora voters have been removed from the identity-reinforcing social environment. Their ethnic voting drops. The mechanism Lee identified (identity as social heuristic in diverse environments) is supported by the observed decay of that heuristic when the social context changes.

Lebanon. The constitutional system literally allocates political power by religious sect: the President is Maronite, the Prime Minister is Sunni, and the Speaker of Parliament is Shia. The system exists because, over a century of experience, the Lebanese concluded that Lee’s observation was inescapable and the only way to maintain stability was to formalize it. Lebanon’s 1932 census has never been updated because updating it would change the power balance. You do not freeze a demographic census for 94 years unless identity voting is the dominant political force and everyone knows it.

Singapore itself. Lee’s own country provides the control case. He imposed racial quotas in public housing, mandatory Group Representation Constituencies requiring multiethnic slates, English as the lingua franca, and aggressive integration policies. These are the interventions of a man who believed his own observation and was trying to manage its consequences rather than pretend it was not true. Singapore’s leaders to this day reiterate that “identity politics has no place in Singapore,” which is an admission that without active suppression, identity politics would dominate Singapore just as it dominates everywhere else.

The mathematical coherence is strong. The predicted correlations exist, they hold across countries and time periods, they hold at the correct magnitudes, and the exceptions (diaspora Kenyans, variable Hispanic cohesion) fall precisely where the model predicts they should.

EMPIRICAL ANCHORING (E): 85

The empirical evidence is extensive, cross-cultural, and spans multiple methodologies.

Gorton and Denton 2026: Green vote tracks Muslim demographic share, overriding ideological incompatibility. Democracy Volunteers reported family voting at 15 of 22 polling stations, a social-pressure mechanism that only works within identity networks.

US presidential elections 1964-2024: Black voting bloc stable at 80%+ Democratic across vastly different economic conditions, candidate profiles, and policy platforms. The most powerful single predictor of American voting behavior remains race.

Kenya: ethnic census model of elections well-documented across multiple election cycles, with ethnic identity outperforming economic indicators as a predictor of vote choice.

Lebanon: formal constitutionalization of sectarian identity voting, with the system enduring for over a century across colonial rule, civil war, and reconstruction.

India: BJP’s rise tracks Hindu identity mobilization; Muslim voting patterns in India cluster around whichever party is perceived as protecting Muslim interests, regardless of economic platform.

Malaysia: UMNO/Malay, MCA/Chinese, MIC/Indian political structure explicitly organized along racial lines for decades.

Qatar: Experimental evidence from conjoint survey shows strong cosectarian candidate preference even in elections with no distributional stakes, eliminating the clientelism explanation.

Partial counterexamples:

Hispanic voters in the US, 2024: shifted significantly toward Trump on economic grounds, breaking from the identity-voting pattern. But Hispanics are the least internally homogeneous “racial” category in American politics, encompassing Cuban Americans, Puerto Ricans, Mexicans, and others with very different national identities. When measured by actual nationality rather than the artificial census category “Hispanic,” identity voting reasserts itself: Cuban Americans voted 70% Trump; Puerto Ricans voted majority Harris.

Diaspora Kenyans: weaker ethnic voting than in-country Kenyans, consistent with the model (removal from identity-reinforcing social context).

Class-based voting in homogeneous societies: Scandinavian countries, Japan, and other ethnically homogeneous nations show strong class-based voting, exactly as Lee predicted. His claim is specifically about multiethnic societies. In homogeneous societies, identity is not a variable, so economic interest becomes the primary differentiator. The claim’s scope condition holds.

The counterexample that would matter most is the one that does not exist: there is no multiethnic society in which economic voting consistently dominates identity voting over multiple election cycles. Individual elections can show economic factors rising in importance (US 2024 Hispanic shift), but these are fluctuations around an identity baseline, not replacements of it. The baseline reasserts itself.

The empirical record is deep, cross-cultural, longitudinal, and consistent. The exceptions are predicted by the model. This is about as good as social science evidence gets.

COMPOSITE: 81.7

L = 78, M = 82, E = 85.
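The composite is consistent with an unweighted mean of the three dimension scores. That weighting is an assumption here, inferred from the arithmetic rather than stated in the text. A minimal sketch of the calculation:

```python
# Dimension scores as stated in this analysis.
scores = {"L": 78, "M": 82, "E": 85}

# Assumption: the composite is the unweighted arithmetic mean of L, M, and E.
composite = sum(scores.values()) / len(scores)

print(f"{composite:.1f}")  # 245 / 3 = 81.666..., printed as 81.7
```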

This is the highest-scoring political claim we have evaluated. The Lee claim is an observable regularity with sixty years of cross-cultural evidence and a clean causal mechanism. The score reflects genuine epistemic strength. The claim has a logically coherent mechanism (identity as prior heuristic for group interest), produces quantitative predictions that are confirmed across multiple independent datasets, and is empirically anchored in evidence spanning four continents, multiple electoral systems, and decades of observation.

The Gorton and Denton confirmation is particularly clean because it involves a party (the Greens) whose policy platform on everything except the identity-salient issues (Gaza, Islamophobia) is diametrically opposed to the social conservatism of the Muslim community that elected them. If economic or social interest were the primary driver, socially conservative Muslims would not be voting for a party that wants to liberalize drugs and whose cultural values are, in the words of the UnHerd analysis, “fundamentally at odds with Islamic social conservatism.” They voted Green because the Greens were the party that most visibly championed the identity of the Muslim community. The policy disagreements on every other dimension were irrelevant.

What the score does not mean: It does not mean identity voting is the only factor. It does not mean it is equally strong in all contexts. It does not mean it cannot be managed or mitigated (Singapore demonstrates that it can, with sufficient political will and authoritarian capacity). It means that in multiethnic, multireligious societies operating under democratic electoral systems, identity is the primary determinant of voting behavior, dominating economic and social interest as the organizing principle of political coalitions. This claim warrants assent at a high confidence level.

Lee Kuan Yew told the truth. The math confirms it. The evidence, from Manchester to Nairobi to Beirut to Washington, confirms it again. And the people most committed to denying it are the ones building their political strategies on the assumption that it is true.
